Ceph can split data by disk type: for example, “hot” data on SSD and “cold” data on HDD.

, « ». Ceph «». , 2021. :

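Disks in a cluster often differ in type (HDD/SSD). A common task is to put data that needs fast access on SSD and everything else on HDD.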

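Disks of the same type can differ as well: for example, some are SAS disks at 15,000 RPM and others are slower. The former can then be treated as “fast” and the latter as “slow”.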

, .

Consider the CRUSH map: a root at the top, 3 hosts under it, and OSDs on each host.

The cluster also runs RGW.

Ceph

Ceph : « » « , ». Ceph — . , - OSD, object stores.

, . : « , » — - . .

Ceph . — , , . «» . , SSD, 60- — , OSD .

Ceph . , 3 , . Ceph . , CRUSH, , . .

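The steps:

- create a host bucket;

- create a new root and move the host into it;

- add an OSD to the host, so that it ends up under the new root;

- create a CRUSH rule for the new root;

- create a pool that uses this rule.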

: , , . .

The general form of the command:

ceph osd crush add-bucket {bucket-name} {bucket-type}

For example, create a bucket named node4 of type host:

ceph osd crush add-bucket node4 host

, .

Next, create a new root to hold it:

ceph osd crush add-bucket new_root root

This time the bucket type is root instead of host.

Now place the host under the new root by moving node4 into new_root:

ceph osd crush move node4 root=new_root
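The three bucket commands can be wrapped in one helper. This is a minimal sketch that assumes a POSIX shell with the `ceph` CLI and admin credentials. The function name `isolate_host` is hypothetical and is not part of Ceph.

```shell
# Hypothetical helper (not part of Ceph): create a host bucket and a
# root bucket, then move the host under that root.
isolate_host() {
    host="$1"   # bucket name for the host, e.g. node4
    root="$2"   # name of the new root, e.g. new_root
    ceph osd crush add-bucket "$host" host || return 1
    ceph osd crush add-bucket "$root" root || return 1
    ceph osd crush move "$host" root="$root"
}

# Usage: isolate_host node4 new_root
```

The helper stops at the first command that fails, so a half-built hierarchy is easy to spot.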

Next, create an OSD on this host.

Pick a disk for it (here “vdb”) and wipe it first:

ceph-volume lvm zap /dev/vdb

Then export the bootstrap-osd key, which ceph-volume needs in order to create the OSD:

ceph auth get client.bootstrap-osd -o /var/lib/ceph/bootstrap-osd/ceph.keyring

Create the OSD:

ceph-volume lvm create --data /dev/vdb

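The OSD is added to the CRUSH map automatically, under the host it runs on. Because node4 already sits under new_root, the new OSD ends up in the new root as well.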

OSD . : ( — 9). - OSD, OSD .

, . , OSD , .

Check the result:

ceph osd df tree

The OSD is in place, but it holds no placement groups (PGs) yet.

OSD placement groups, . root’ — . .

Placement groups will appear on it only once a pool uses a rule that points to this root.

CRUSH- . — .

— , , - . .

Create a rule for the new root:

ceph osd crush rule create-simple new_root_rule new_root host

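Here:

- new_root_rule: the rule name;
- new_root: the root the rule takes OSDs from;
- host: the failure domain, i.e. the bucket type that replicas are spread across, so no two copies of a PG land on the same host.

Instead of host you can use another bucket type from the hierarchy, for example datacenter.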

Now create a pool that uses this rule:

ceph osd pool create new_root_pool1 32 32 replicated new_root_rule

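The command passes the rule (new_root_rule) and the PG counts. The first number is pg_num, the number of placement groups. The second is pgp_num, the number used for data placement. Both are 32 here. Ceph has recommendations for how many placement groups a pool should have. For example, instead of 128 PGs, this test pool gets 32 and 32.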
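To get a feel for the numbers, here is the older rule of thumb from the Ceph placement-group documentation: (OSDs × 100) / pool size, rounded up to a power of two. This is only a sketch for a POSIX shell. `pg_num_for` is a hypothetical name, and on recent releases the pg_autoscaler may adjust pg_num on its own.

```shell
# Rule-of-thumb pg_num: (OSDs * 100) / pool size, rounded up to a power of two.
pg_num_for() {
    osds="$1"   # number of OSDs the pool's rule can use
    size="$2"   # replica count of the pool
    target=$(( (osds * 100 + size - 1) / size ))   # ceiling division
    pg=1
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))   # next power of two
    done
    echo "$pg"
}

# Usage: pg_num_for 9 3   # 9 OSDs, size 3 -> target 300 -> 512
```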

new_root , . . , . root’, .

The OSD now holds 32 placement groups, but they are undersized and inactive.

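A placement group needs as many copies as the pool's size. Unless the size is given explicitly, Ceph takes it from “osd_pool_default_size”, which defaults to 3, so every PG wants 3 replicas. The new root has a single host, though. With host as the failure domain, CRUSH can place only one copy there.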
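The reasoning above can be captured in a tiny model. It is a simplification, not Ceph's actual peering logic. With host as the failure domain, CRUSH puts at most one replica per host, so a PG gets min(size, hosts) copies. Below size the PG is undersized; below min_size it also stops serving I/O. The function `pg_health` and its state strings are hypothetical.

```shell
# Simplified model (hypothetical): how many copies a PG can get when
# the failure domain is "host" and the root contains $3 hosts.
pg_health() {
    size="$1"; min_size="$2"; hosts="$3"
    copies=$(( hosts < size ? hosts : size ))   # at most one copy per host
    if [ "$copies" -lt "$min_size" ]; then
        echo "inactive+undersized"
    elif [ "$copies" -lt "$size" ]; then
        echo "undersized"
    else
        echo "ok"
    fi
}

# Usage: pg_health 3 2 1   # default size/min_size, one host
```

With the defaults (size 3, min_size 2) and one host the model reports inactive+undersized. Lowering min_size to 1 leaves the PGs undersized, and size 1 clears both conditions, which matches the sequence of commands that follows.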

For this test, first lower min_size:

ceph osd pool set new_root_pool1 min_size 1

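Then lower the size itself:

ceph osd pool set new_root_pool1 size 1

Depending on the Ceph version, size 1 may be refused unless mon_allow_pool_size_one is enabled and --yes-i-really-mean-it is added. The pool is now replicated with size 1. Check the status: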

ceph -s

The placement groups are no longer inactive or undersized.

OSD , ( , , , - ; , Ceph ).

, . - , .

: , . , -, , .

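To separate disk types, Ceph has “device classes”: pools that need speed can be bound to SSD and the rest to HDD. Ceph itself detects which OSDs are HDD and which are SSD.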

Ceph decides this from the disk's rotational flag. A disk the kernel reports as rotational gets the hdd class; a non-rotational one gets ssd.
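You can inspect the same flag directly in sysfs. This sketch assumes Linux and a POSIX shell. `class_from_rotational` is a hypothetical helper that mirrors the rule described above (1 means hdd, anything else means ssd). In the cluster itself, `ceph osd tree` shows the class Ceph actually assigned, in its CLASS column.

```shell
# Hypothetical helper: map the kernel's rotational flag to a class name.
class_from_rotational() {
    if [ "$1" = "1" ]; then echo hdd; else echo ssd; fi
}

# List local block devices with the class implied by their flag.
for f in /sys/block/*/queue/rotational; do
    [ -r "$f" ] || continue          # no match or unreadable: skip
    dev=${f#/sys/block/}             # strip the sysfs prefix ...
    dev=${dev%%/*}                   # ... and the suffix, leaving e.g. vdb
    echo "$dev $(class_from_rotational "$(cat "$f")")"
done
```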

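Sometimes a disk reports itself as rotational even though it is not (virtual disks often do this), and Ceph then classifies it as HDD.

If you know the disk is really an SSD, change its class from hdd to ssd by hand.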

SSD .

First remove the current hdd class:

ceph osd crush rm-device-class osd.0 osd.2 osd.4

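The hdd class has to be removed first because Ceph will not overwrite an existing class: set-device-class returns an error for an OSD that is already bound to one. Keep in mind that CRUSH rules restricted to hdd or ssd will start or stop using these OSDs once their class changes.

Now assign the ssd class. Class names are case-sensitive, and the rules below use lowercase ssd:

ceph osd crush set-device-class ssd osd.0 osd.2 osd.4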
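Since the old class must be removed before a new one is set, the two commands can be bundled. This is a minimal sketch assuming the `ceph` CLI. `reclass_osds` is a hypothetical helper.

```shell
# Hypothetical helper: rebind OSDs to a new device class.
# set-device-class refuses to overwrite an existing class,
# so the old class is removed first.
reclass_osds() {
    class="$1"; shift                 # remaining arguments are OSD names
    ceph osd crush rm-device-class "$@" || return 1
    ceph osd crush set-device-class "$class" "$@"
}

# Usage: reclass_osds ssd osd.0 osd.2 osd.4
```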

:

OSD.

Now create a rule for SSD. First, list the existing rules:

ceph osd crush rule ls

- replicated_rule: the default rule;
- new_root_rule: the rule for the new root created earlier.

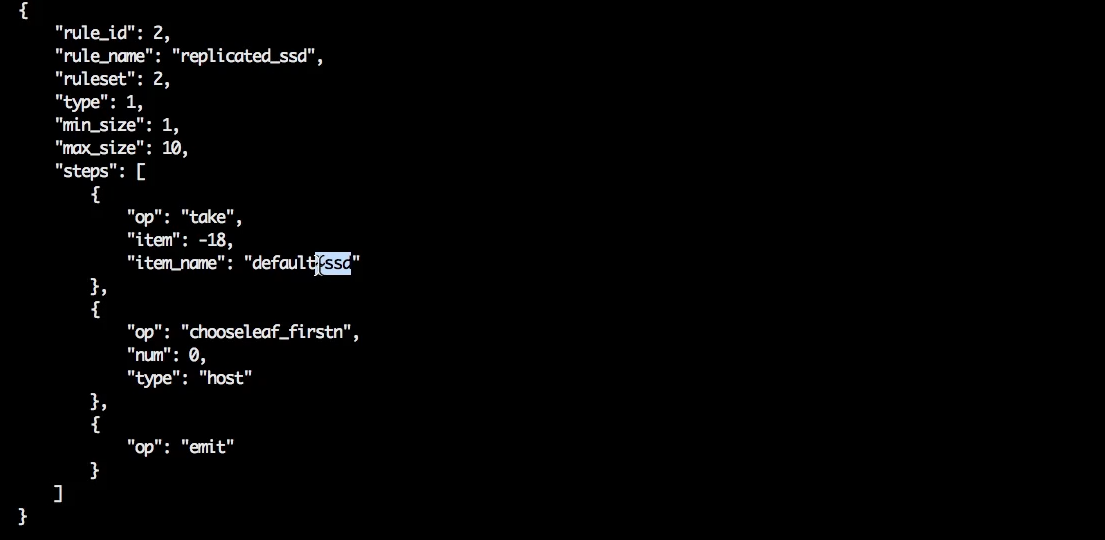

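Create a rule that places data only on SSD. This time use create-replicated instead of create-simple, because only create-replicated accepts a device class.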

ceph osd crush rule create-replicated replicated_SSD default host ssd

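Here:

- replicated_SSD: the rule name;
- default: the root;
- host: the failure domain (each replica goes to a different host);
- ssd: the device class (ssd or hdd).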
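Later in the text, pools are moved to a rule called replicated_hdd, but its creation is not shown. This sketch assumes it is created the same way as replicated_SSD (root default, failure domain host). The helper name `create_class_rules` is hypothetical.

```shell
# Hypothetical helper: one replicated rule per device class under the
# given root (default: "default"), failure domain "host".
create_class_rules() {
    root="${1:-default}"
    for class in ssd hdd; do
        case "$class" in
            ssd) name=replicated_SSD ;;   # name used in the text
            hdd) name=replicated_hdd ;;   # assumed name for the hdd rule
        esac
        ceph osd crush rule create-replicated "$name" "$root" host "$class" || return 1
    done
}

# Usage: create_class_rules default
```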

.

Rules can also be built for erasure coding.

erasure-code

With erasure coding, the CRUSH rule is derived from an erasure-code profile, so create the profile first:

ceph osd erasure-code-profile set ec21 k=2 m=1 crush-failure-domain=host crush-device-class=ssd

Here:

- ec21: the profile name;
- host: the failure domain;
- ssd: the device class.

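The CRUSH rule itself can be created in two ways:

- create it explicitly from the profile,

- or let Ceph create it automatically when an erasure-coded pool is created with this profile.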

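A replicated CRUSH rule does not say how many copies to keep; that is the pool's size. For erasure coding, the layout comes from the profile instead, through “k” (data chunks) and “m” (coding chunks).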
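The trade-off between k and m is simple arithmetic. With crush-failure-domain=host, each of the k+m chunks goes to a different host. Raw usage is (k+m)/k of the data, and up to m chunks can be lost without losing data. The sketch below uses a hypothetical `ec_summary` function. For ec21 it gives 3 hosts and 150% raw usage, compared with 300% for a replicated pool of size 3.

```shell
# Hypothetical helper: basic numbers for an erasure-code profile.
ec_summary() {
    k="$1"; m="$2"                          # data chunks, coding chunks
    hosts=$(( k + m ))                      # one chunk per failure domain
    overhead=$(( (k + m) * 100 / k ))       # raw space per byte of data, in %
    echo "hosts=$hosts overhead=${overhead}% tolerates=$m"
}

# Usage: ec_summary 2 1
```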

View the profile:

ceph osd erasure-code-profile get ec21

Create an erasure-coded pool with it:

ceph osd pool create ec1 16 erasure ec21

The erasure-coded pool lands only on SSD, because the profile sets the ssd class.

replicated

CRUSH- .

Look at the existing pools (ceph df). Metadata pools such as rgw.meta are good candidates for SSD.

Move a pool to SSD:

ceph osd pool set fs1_meta crush_rule replicated_SSD

- fs1_meta: the pool name;
- replicated_SSD: the rule.

This starts a rebalance: the pool's data migrates to the SSD OSDs.

: SSD, HDD. SSD placement groups , . , HDD. , , , rebalance.

Assign rules to the remaining pools:

ceph osd pool set device_health_metrics crush_rule replicated_hdd
ceph osd pool set .rgw.root crush_rule replicated_SSD
ceph osd pool set default.rgw.log crush_rule replicated_hdd
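When many pools change rules, a loop saves typing. This sketch assumes the `ceph` CLI. `set_pools_rule` is a hypothetical helper that stops at the first failure.

```shell
# Hypothetical helper: point several pools at one CRUSH rule.
set_pools_rule() {
    rule="$1"; shift                  # remaining arguments are pool names
    for pool in "$@"; do
        ceph osd pool set "$pool" crush_rule "$rule" || return 1
    done
}

# Usage: set_pools_rule replicated_hdd device_health_metrics default.rgw.log
```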

Now delete the default rule, “replicated_rule”:

ceph osd crush rule rm replicated_rule

If the command fails with “is in use”, some pool still uses the rule. To find out which one, list the pools:

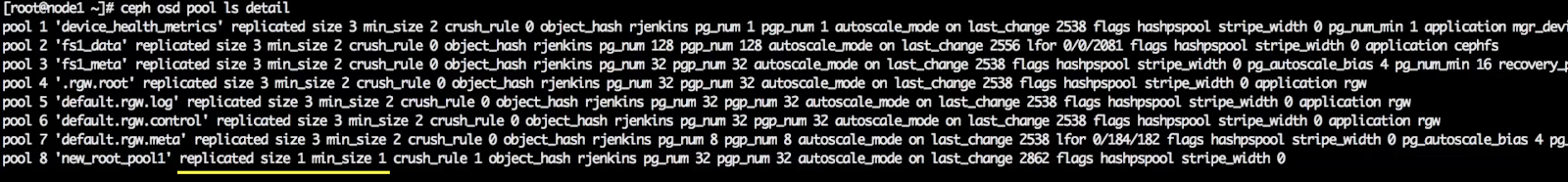

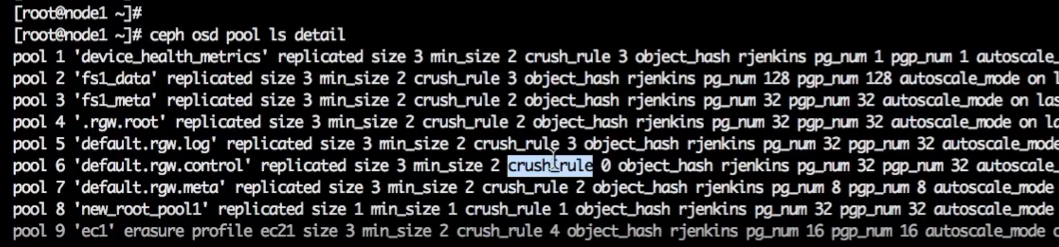

ceph osd pool ls detail

Look at the “crush_rule” field of each pool.
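With many pools, the lookup can be scripted. This sketch assumes the `ceph osd pool ls detail` line format `pool <id> '<name>' ... crush_rule <id> ...`. `pools_on_rule` is a hypothetical helper that prints the pools using a given rule id.

```shell
# Hypothetical helper: print names of pools whose crush_rule equals $1,
# reading `ceph osd pool ls detail` output on stdin.
pools_on_rule() {
    awk -v id="$1" '
        $1 == "pool" {
            for (i = 1; i < NF; i++)
                if ($i == "crush_rule" && $(i + 1) == id) {
                    name = $3
                    gsub(/\047/, "", name)   # strip the surrounding quotes
                    print name
                }
        }'
}

# Usage: ceph osd pool ls detail | pools_on_rule 0
```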

One pool still has crush_rule 0, that is, replicated_rule. Move it to another rule:

ceph osd pool set default.rgw.control crush_rule replicated_hdd

Try the removal again:

ceph osd crush rule rm replicated_rule

Done!

Finally, check where a pool's placement groups live. PG ids start with the pool ID. Let's make sure ec1 really is on SSD.

ceph df

Find ec1's ID in the output; it is needed to locate the pool's placement groups. Here it is 9.

ceph pg dump | grep ^9

These are the placement groups of ec1. They all sit on OSDs 0, 2 and 4, which are the SSD OSDs.
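The check can be automated by collecting the OSD ids from the acting sets. This sketch assumes the column layout of `ceph pg dump pgs_brief` (PG_STAT, STATE, UP, UP_PRIMARY, ACTING, ACTING_PRIMARY). `osds_for_pool` is a hypothetical helper. Unlike `grep ^9`, it matches only PG ids that start with `9.`, so pools 90 and above are not mixed in.

```shell
# Hypothetical helper: distinct OSD ids in the acting sets of pool $1,
# reading `ceph pg dump pgs_brief` output on stdin.
osds_for_pool() {
    awk -v pool="$1" '
        index($1, pool ".") == 1 {
            set = $5                       # ACTING column, e.g. [0,2,4]
            gsub(/[^0-9,]/, "", set)       # keep only digits and commas
            n = split(set, ids, ",")
            for (i = 1; i <= n; i++)
                if (ids[i] != "" && ids[i] != "2147483647")   # skip empty and "no OSD" slots
                    seen[ids[i]] = 1
        }
        END { for (id in seen) print id }' | sort -n
}

# Usage: ceph pg dump pgs_brief | osds_for_pool 9
```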